BENEFITS OF PARALLEL COMPUTING IN REAL TIME APPLICATIONS

Introduction

Parallel computing uses multiple processors to work on several operations at once. In contrast to serial computing, a parallel architecture can divide a task into its component parts and execute them concurrently. Parallel computer systems are well suited to modelling and simulating real-world phenomena.
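The idea of dividing a task into parts and running them at once can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names (`chunked`, `sum_of_squares`, `parallel_sum_of_squares`) and the default of four workers are assumptions made for the example. It uses a thread pool from the standard library to keep the sketch portable; in CPython, CPU-bound work would typically need `ProcessPoolExecutor`, which offers the same interface, to actually run on several cores.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, n_chunks):
    """Split items into at most n_chunks contiguous, roughly equal slices."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def sum_of_squares(chunk):
    """The per-chunk work: independent of every other chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, workers=4):
    """Divide the task, run the parts concurrently, then combine the results."""
    chunks = chunked(items, workers)
    if not chunks:
        return 0
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10))))  # same answer as a serial loop
```

The design point is that each chunk can be processed without knowing anything about the others, which is what makes the work divisible in the first place.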

Without parallel computing, digital tasks would be tedious, to say the least. Every task would take far longer if your iPhone or laptop could perform only one action at a time. To appreciate the speed (or lack thereof) of serial computing, consider the smartphones of 2010. Both the iPhone 4 and the Motorola Droid used serial processors. Opening an email on such a phone could take up to 30 seconds, which feels like an eternity today.

Advantages of parallel computing

The benefits of parallel computing include faster “big data” processing and more efficient code execution, both of which save time and money. By drawing on more resources, parallel programming can also solve harder problems. That benefits applications ranging from improving solar power to changing how the banking sector operates (Wang et al., 2019).

Modelling the Real World with Parallel Computing

The real world is not serial. Events do not take place one at a time, each waiting for the previous one to end before it begins. To analyse data points on traffic, weather, finance, agriculture, industry, oceans, healthcare, and ice caps, we need parallel computers (Lepikhin et al., 2020).

Saves Time

Serial computing forces fast processors to work inefficiently. It is like using a Ferrari to transport 20 oranges from Maine to Boston one at a time. No matter how fast the car is, making 20 separate trips is less efficient than combining the deliveries into a single journey.

Saves Money

Parallel computing reduces costs by speeding up processes. On a small scale, the savings from more efficient resource use might not seem significant. However, when a system is scaled out to billions of operations, as in banking software, the cost savings become enormous (Hockney & Jesshope, 2019).

Solves Larger or More Complex Problems

Technology keeps advancing. With AI and big data, a single web app may process millions of transactions per second. In addition, “grand challenges” such as securing cyberspace or lowering the cost of solar energy will require petaflops of computing power. Parallel computing will help drive that progress (Van de Ven & Tolias, 2019).

Leverage Remote Resources

Every day, people produce 2.5 quintillion bytes of data. That is 25 followed by 17 zeros. Crunching numbers on that scale seems impossible. Or is it? With parallel processing, many computers with multiple cores each can process far more real-time data simultaneously than a single serial computer can.

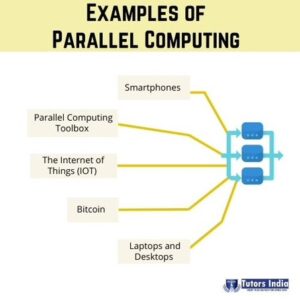

Examples of parallel computing

Although you may be reading this article on one, parallel computers have in fact existed since the early 1960s. They range from the compact, low-cost Raspberry Pi to the Summit supercomputer, which ranked as the most powerful machine in the world when it debuted in 2018. Several examples of how parallel processing powers our world are provided below.

Smartphones

The iPhone 5 has a dual-core processor that runs at 1.5 GHz. The iPhone 11 has six cores. The Samsung Galaxy Note 10 has eight cores. All of these smartphones are examples of parallel processing.

Laptops and Desktops

Parallel computing is exemplified by the Intel® processors that run the majority of contemporary computers. The Intel Core™ i5 and Core i7 CPUs in the HP Spectre Folio and HP EliteBook x360 each have four processing cores. The HP Z8, one of the most powerful workstations on the market, has 56 processing cores, enabling it to run intricate 3D simulations or edit 8K video in real time (Meniailov et al., 2020).

Bitcoin

Bitcoin’s blockchain technology relies on many computers to validate transactions. In the coming years, blockchain may come to be used for practically all financial transactions. Without parallel processing, Bitcoin and the blockchain could not function. In a world of serial computing, the “chain” in blockchain would disappear.

The Internet of Things (IoT)

With 20 billion devices and more than 50 billion sensors, the floodgates are open on our daily data flow. Traditional serial computing cannot keep up with the flood of real-time telemetry coming from the IoT, which includes data from soil sensors, smart cars, drones, and pressure sensors.

Parallel Computing Toolbox

MathWorks’ Parallel Computing Toolbox helps programmers get the most out of multi-core machines. With this MATLAB toolbox, users can handle big data jobs that are too large for a single processor.

Image processing is another area where parallel computing is expected to matter more in the coming years. Image processing means enhancing an image and extracting useful information from it, and it is becoming more significant in many different applications (Mochurad & Shchur, 2021).
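Image processing lends itself to parallel work because many operations treat each pixel independently. The sketch below is an assumption-laden illustration rather than a production approach: it represents an 8-bit grayscale image as a plain list of rows, and the helper names (`invert_band`, `split_into_bands`, `parallel_invert`) are hypothetical. A thread pool keeps the example portable; for real CPU-bound speedups in CPython, a process pool or a native image library would typically be used instead.

```python
from concurrent.futures import ThreadPoolExecutor


def invert_band(band):
    """Invert every pixel in a band of rows (8-bit grayscale, 0..255)."""
    return [[255 - pixel for pixel in row] for row in band]


def split_into_bands(image, n_bands):
    """Cut the image into at most n_bands horizontal bands of whole rows."""
    rows_per_band = max(1, -(-len(image) // n_bands))  # ceiling division
    return [image[i:i + rows_per_band]
            for i in range(0, len(image), rows_per_band)]


def parallel_invert(image, workers=4):
    """Invert an image by processing its bands concurrently."""
    bands = split_into_bands(image, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so bands reassemble correctly.
        processed = pool.map(invert_band, bands)
        return [row for band in processed for row in band]


if __name__ == "__main__":
    tiny_image = [[0, 128], [255, 10], [5, 250]]
    print(parallel_invert(tiny_image, workers=2))
```

Splitting by whole rows works here because inversion needs no neighbouring pixels; filters that do (blurs, edge detection) would need overlapping bands.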

What’s next for parallel computing?

As remarkable as it is, parallel computing may be approaching the limit of what conventional processors can handle. Within the next ten years, quantum computers have the potential to greatly extend parallel computation.

Quantum computing takes parallel computing a significant step further. Think of serial computing as doing one thing at a time. An 8-core parallel computer can perform 8 tasks concurrently. A 300-qubit quantum computer could, in principle, perform more operations simultaneously than there are atoms in the universe.
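The arithmetic behind the 300-qubit comparison can be checked directly. The sketch assumes two things the article does not state: that the comparison counts the 2^n basis states an n-qubit register spans, and that the observable universe holds roughly 10^80 atoms, a commonly quoted order-of-magnitude estimate. Whether that state count translates into usable simultaneous operations depends on the algorithm.

```python
# Assumption: rough, commonly quoted order of magnitude for atoms
# in the observable universe.
ATOMS_IN_OBSERVABLE_UNIVERSE = 10 ** 80


def basis_states(n_qubits):
    """Number of basis states an n-qubit register spans: 2**n."""
    return 2 ** n_qubits


if __name__ == "__main__":
    states = basis_states(300)
    print(len(str(states)))                       # decimal digits in 2**300 -> 91
    print(states > ATOMS_IN_OBSERVABLE_UNIVERSE)  # -> True
```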


References

Hockney, R. W., & Jesshope, C. R. (2019). Parallel Computers 2. CRC Press.

Lepikhin, D., Lee, H., Xu, Y., Chen, D., Firat, O., Huang, Y., Krikun, M., Shazeer, N., & Chen, Z. (2020). GShard: Scaling giant models with conditional computation and automatic sharding. arXiv preprint arXiv:2006.16668.

Meniailov, I., Krivtsov, S., Ugryumov, M., Bazilevich, K., & Trofymova, I. (2020). Application of parallel computing in robust optimization design. In Integrated Computer Technologies in Mechanical Engineering (pp. 514–522). Springer.

Mochurad, L., & Shchur, G. (2021). Parallelization of Cryptographic Algorithm Based on Different Parallel Computing Technologies. IT&AS, 20–29.

Van de Ven, G. M., & Tolias, A. S. (2019). Three scenarios for continual learning. arXiv preprint arXiv:1904.07734.

Wang, X., Feng, L., & Zhao, H. (2019). Fast image encryption algorithm based on parallel computing system. Information Sciences, 486, 340–358.
